New Delhi, July 2025.

At Meta, we work to protect young people from both direct and indirect harm, from Teen Accounts, which are designed to give teens age-appropriate experiences and prevent unwanted contact, to our sophisticated technology that finds and removes exploitative content.

Today, we’re announcing a range of updates to bolster these efforts, and we’re sharing new data on the impact of our latest safety tools.

Protecting Teens from Potentially Unsafe or Unwanted Contact

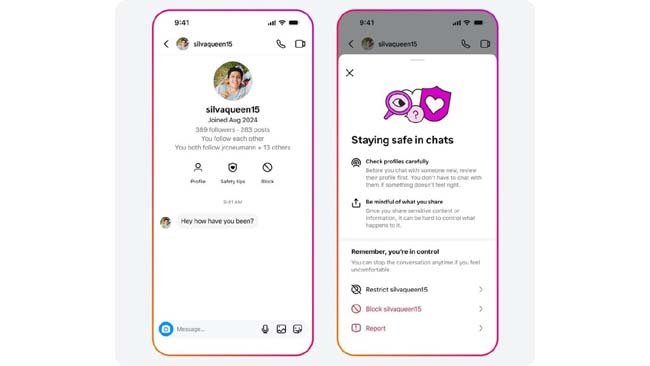

We’ve added new safety features to DMs in Teen Accounts to give teens more context about the accounts they’re messaging and help them spot potential scammers. Now, teens will see new options to view safety tips and block an account. They’ll also be able to see the month and year the account joined Instagram, prominently displayed at the top of new chats.

We’ve also launched a new block and report option in DMs, so that people can take both actions together. While we’ve always encouraged people to both block and report, this new combined option makes the process easier and helps make sure potentially violating accounts are reported to us, so we can review them and take action.

These new features complement the safety notices we show to remind people to be cautious in private messages and to block and report anything that makes them uncomfortable - and we’re encouraged to see teens responding to them. In June alone, they blocked accounts 1 million times and reported another 1 million after seeing a safety notice.

In June, teens and young adults also saw our new Location Notice on Instagram 1 million times. This notice lets people know when they’re chatting with someone who may be in a different country, and is designed to help protect people from potential sextortion scammers who often misrepresent where they live. Over 10% tapped on the notice to learn more about the steps they could take.

Since rolling out our nudity protection feature globally, 99% of people - including teens - have kept it turned on, and in June, over 40% of blurred images received in DMs stayed blurred, significantly reducing exposure to unwanted nudity. Nudity protection, on by default for teens, also encourages people to think twice before forwarding suspected nude images, and in May people decided against forwarding around 45% of the time after seeing this warning.

Strengthening Protections for Adult-Managed Accounts Primarily Featuring Children

We’re also strengthening our protections for accounts run by adults that primarily feature children. These include adults who regularly share photos and videos of their children, and adults - such as parents or talent managers - who run accounts that represent teens or children under 13. While you have to be at least 13 to use Instagram, we allow adults to run accounts representing children under 13 if it’s clear in the account bio that they manage the account. If we become aware that the account is being run by the child themselves, we’ll remove it.

While these accounts are overwhelmingly used in benign ways, unfortunately there are people who may try to abuse them, leaving sexualized comments under their posts or asking for sexual images in DMs, in clear violation of our rules. Today we’re announcing steps to help prevent this abuse.

First, we’re extending some Teen Account protections to adult-managed accounts that primarily feature children. These include automatically placing these accounts into our strictest message settings to prevent unwanted messages, and turning on Hidden Words, which filters offensive comments. We’ll show these accounts a notification at the top of their Instagram Feed, letting them know we’ve updated their safety settings, and prompting them to review their account privacy settings too. These changes will roll out in the coming months.

We also want to prevent potentially suspicious adults, for example adults who have been blocked by teens, from finding these accounts in the first place. We’ll avoid recommending these accounts to potentially suspicious adults and vice versa, make it harder for them to find each other in Search, and hide comments from potentially suspicious adults on these accounts’ posts. This builds on last year’s update to stop allowing accounts primarily featuring children to offer subscriptions or receive gifts.

Taking Action on Harmful Accounts Across the Internet

In addition to these new protections, we’re also continuing to take aggressive action on accounts that break our rules. Earlier this year, our specialist teams removed nearly 135,000 Instagram accounts for leaving sexualized comments or requesting sexual images from adult-managed accounts featuring children under 13. We also removed an additional 500,000 Facebook and Instagram accounts that were linked to those original accounts. We let people know that we’d removed an account that had interacted inappropriately with their content, encouraging them to be cautious and to block and report.

People who seek to exploit children don’t limit themselves to any one platform, which is why we also shared information about these accounts with other tech companies through the Tech Coalition’s Lantern program.